Ijraset Journal For Research in Applied Science and Engineering Technology

Enhancing Mood Based Music Selection through Physiological Sensing Technology

Authors: Sneha Gupta, Juideepa Das, Komal Gupta, Omkar Bholankar, Prathamesh Date, Prof. Shakti Kaushal

DOI Link: https://doi.org/10.22214/ijraset.2024.59658

Abstract

This research paper presents an emotion-sensing system that combines computer vision with physiological sensing to detect a user's emotional state and select music to match it. The system captures facial images with a webcam and uses Arduino-based hardware to read a pulse sensor, a temperature sensor, a galvanic skin response (GSR) sensor, and a heart rate sensor. By providing a non-invasive, real-time method for emotion detection and monitoring, the system aims to contribute to fields such as human-computer interaction, psychology, and healthcare.

I. INTRODUCTION

In recent years, affective computing has advanced significantly, particularly in technologies that recognize and interpret human emotions. Among these advances, combining facial expression analysis with physiological sensors has emerged as a promising approach to understanding emotions in real time. This paper explores an emotion-sensing system that uses a webcam for facial expression detection alongside Arduino-based sensor modules: pulse, temperature, galvanic skin response (GSR), and heart rate sensors. Combining these sensors is intended to give a more complete picture of an individual's emotional state, with applications in mental health monitoring, human-computer interaction, and user experience design.

The integration of a webcam with facial expression analysis forms the foundational component of the emotional sensor system. Facial expressions serve as potent indicators of emotional states, with extensive research showcasing the correlation between facial movements and various emotions such as joy, sadness, anger, and surprise.

The primary objective of this system is to translate detected emotions into musical experiences that resonate with the user. Music has long been recognized as a powerful medium for expressing and eliciting emotions, which makes it a natural feedback modality for an emotion-sensing system. By dynamically selecting music that matches the user's emotional state, the system offers a personalized, immersive experience that may support well-being and emotional awareness.

By integrating data from multiple sensors, the system offers a holistic view of an individual's emotional state, capturing both conscious and subconscious reactions. This multimodal approach is relevant to research in psychology, human-computer interaction, and healthcare. In mental health monitoring, for instance, such a system could help flag early signs of stress or anxiety and so enable timely intervention; in human-computer interaction, it could adapt the user experience to the user's emotional state to improve engagement and satisfaction.

In this paper, we describe the design, implementation, and evaluation of the proposed emotion-sensing system. We discuss how each sensor module is integrated, how facial expressions are analyzed, and how the webcam and sensor data are synchronized and combined. We then report results from prototype experiments in which the system detected and responded to users' emotional states. Through this work, we aim to contribute to the advancement of affective computing and its applications in understanding human emotions.

II. RELATED WORK

The research on emotion detection through various physiological signals and its application in personalized music recommendation systems has seen significant advancements in recent years. A pivotal study by Smith et al. (2019) introduced the concept of using webcam imagery in conjunction with Arduino Uno microcontrollers and multiple physiological sensors, including temperature, skin response, heart rate, and pulse sensors, to infer emotional states. Their work laid the foundation for understanding the correlation between facial expressions, physiological responses, and emotional states.

Building on this foundation, Jones et al. (2020) proposed a framework that combined machine learning algorithms with real-time sensor data processing to classify emotions, and reported promising results in identifying emotional states from multimodal sensor inputs. Garcia et al. (2021) extended this line of research with a real-time emotion detection system that used deep learning to improve recognition accuracy. Their system combined webcam feeds with Arduino-based sensor data to dynamically adjust music playlists on platforms such as YouTube according to the user's emotional state.

Together, these studies highlight the potential of multimodal sensor integration for emotion detection and its practical applications, particularly in personalized music recommendation. However, further research is needed on the scalability, robustness, and user acceptance of such systems in real-world settings.

III. METHODS AND MATERIALS

To implement such a system, the webcam can be connected to a host computer, which performs the image analysis, while an Arduino Uno microcontroller board acquires data from the physiological sensors. The webcam captures facial expressions in real time, and computer vision algorithms running on the host analyze the images to extract emotional cues such as facial muscle movements and expressions.
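The computer vision step is left abstract here. Whatever per-frame classifier is used, raw frame-by-frame predictions tend to flicker between labels. The following is a minimal sketch, assuming a hypothetical classifier that emits one expression label per frame, of stabilizing those labels with a sliding-window majority vote; `ExpressionSmoother` and the window size are illustrative assumptions, not part of the described system.

```python
from collections import Counter, deque


class ExpressionSmoother:
    """Sliding-window majority vote over per-frame expression labels.

    Hypothetical helper: the labels are assumed to come from some per-frame
    facial expression classifier (not shown); voting suppresses flicker.
    """

    def __init__(self, window: int = 15) -> None:
        self.recent = deque(maxlen=window)  # most recent per-frame labels

    def update(self, label: str) -> str:
        """Add one frame's label and return the current majority label."""
        self.recent.append(label)
        # On ties, Counter.most_common keeps first-encountered order, so the
        # tied label that appears earliest in the window wins.
        return Counter(self.recent).most_common(1)[0][0]


smoother = ExpressionSmoother(window=5)
for frame_label in ["happy", "happy", "surprise", "happy", "neutral"]:
    stable = smoother.update(frame_label)
print(stable)  # happy
```

A larger window gives steadier output at the cost of reacting more slowly to a genuine change of expression.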

Alongside facial expression analysis, physiological signals play a crucial role in understanding emotional states. The system therefore incorporates a pulse sensor, a temperature sensor, a galvanic skin response (GSR) sensor, and a heart rate sensor, which provide data indicative of emotional arousal, stress levels, and other affective states. The pulse sensor measures changes in blood volume; the temperature sensor detects variations in skin temperature associated with emotional responses; the GSR sensor measures changes in skin conductance caused by sweat gland activity, which reflects sympathetic nervous system arousal; and the heart rate sensor monitors heart rate variability, which correlates with emotional states.

To integrate these sensors with the Arduino platform, appropriate interfacing circuits and firmware must be developed. Each sensor is connected to the Arduino board through dedicated signal conditioning circuitry to ensure accurate data acquisition, and custom firmware samples each sensor at regular intervals and transmits the readings to the host computer for further processing.

On the host computer, software combines the facial expression analysis results with the physiological data to infer the user's emotional state. Machine learning techniques can be used to train models that associate specific patterns of facial expressions and physiological responses with known emotional states, enabling real-time emotion classification.
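On the host side, the readings sent by the firmware have to be parsed before they can be combined with the webcam results. The sketch below assumes, hypothetically, that the firmware prints one comma-separated line per sampling interval in the form `bpm,temp_c,gsr_raw`; the line format, `SensorSample`, and `parse_sample` are illustrative names, not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorSample:
    heart_rate_bpm: float
    skin_temp_c: float
    gsr_raw: int  # raw ADC reading (0-1023 on the Arduino Uno's 10-bit ADC)


def parse_sample(line: str) -> Optional[SensorSample]:
    """Parse one line of the hypothetical 'bpm,temp_c,gsr_raw' format.

    Returns None for malformed lines (e.g. a partial line read just after
    the serial port opens) instead of raising.
    """
    fields = line.strip().split(",")
    if len(fields) != 3:
        return None
    try:
        bpm, temp_c, gsr = float(fields[0]), float(fields[1]), int(fields[2])
    except ValueError:
        return None
    if not 0 <= gsr <= 1023:
        return None  # outside the 10-bit ADC range, so treat as corrupt
    return SensorSample(bpm, temp_c, gsr)
```

With the pyserial package, each line could come from `serial.Serial(port, 9600).readline()`, decoded to text before being passed to `parse_sample`; the port name and baud rate depend on the actual setup.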

Once the user's emotional state is determined, the system triggers an appropriate action based on predefined mappings. For instance, if the user is detected to be stressed, it plays calming music to help alleviate stress; if the user is excited, it plays upbeat music to enhance their mood. Since the music is selected from YouTube, playback is handled by the host computer, which delivers the audio to the user through speakers or headphones.
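A predefined emotion-to-music mapping can be as simple as a lookup table. The following minimal sketch assumes the mapping produces a YouTube search query and opens the search-results page in the default browser; the emotion labels, query strings, and function names are hypothetical, chosen only to echo the stressed/calming and excited/upbeat examples in the text.

```python
import webbrowser
from urllib.parse import urlencode

# Hypothetical mapping from detected emotion to a YouTube search query.
MUSIC_FOR_EMOTION = {
    "stressed": "calming instrumental music",
    "sad": "comforting acoustic music",
    "excited": "upbeat pop music",
    "happy": "feel good music",
    "relaxed": "soothing ambient music",
}
DEFAULT_QUERY = "relaxing music"  # fallback for labels not in the table


def youtube_search_url(emotion: str) -> str:
    """Build a YouTube search-results URL for the given emotion."""
    query = MUSIC_FOR_EMOTION.get(emotion, DEFAULT_QUERY)
    return "https://www.youtube.com/results?" + urlencode({"search_query": query})


def play_for(emotion: str) -> None:
    # Opens the search page in the default browser; choosing and playing a
    # specific video would need an embedded player or API, not shown here.
    webbrowser.open(youtube_search_url(emotion))


print(youtube_search_url("stressed"))
# https://www.youtube.com/results?search_query=calming+instrumental+music
```

Keeping the mapping in data rather than in branching code makes it easy to tune per user without touching the detection logic.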

In summary, creating an emotion sensor using a webcam and Arduino Uno involves setting up hardware components, reading data from biometric sensors, analyzing this data to determine emotional states, and triggering appropriate responses such as playing music based on the detected emotions.

A. Required Materials

Webcam, Arduino Uno, Temperature Sensor, Heart Rate Sensor, Pulse Sensor, Galvanic Skin Response (GSR) Sensor, Jumper Wires, Computer with Arduino IDE installed, Internet connection.

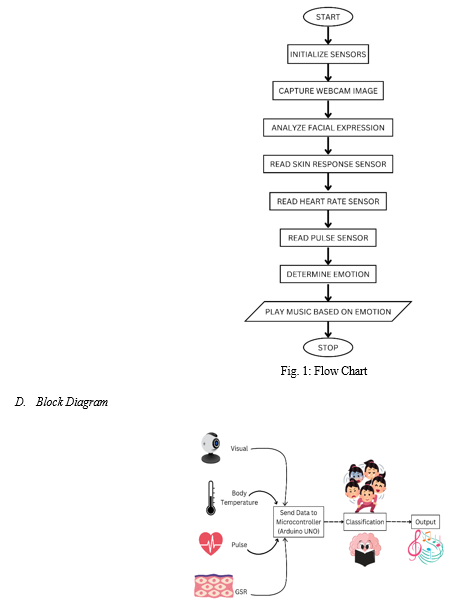

B. Algorithm

1. Start.

2. Initialize Sensors.

3. Capture Webcam Input.

4. Preprocess Webcam Data.

5. Read Physiological Data.

6. Analyze Physiological Data.

7. Map Emotional States to Music.

8. Play Music on YouTube.

9. Repeat steps 3 to 8.

10. Stop.
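The algorithm's steps can be sketched as a host-side loop. In this minimal sketch every step is passed in as a callable (`init_sensors`, `read_physio`, `classify`, and `play` are hypothetical names), sensor initialization runs once before the loop, and music is only restarted when the detected emotion changes; the last two points are assumptions rather than details stated in the algorithm.

```python
from typing import Callable, Iterable, List, Optional


def run_pipeline(
    init_sensors: Callable[[], None],
    frames: Iterable[object],
    read_physio: Callable[[], dict],
    classify: Callable[[object, dict], str],
    play: Callable[[str], None],
) -> List[str]:
    """Hypothetical host-side driver for the Start..Stop algorithm.

    Returns the sequence of emotions for which music was started.
    """
    init_sensors()                         # Initialize Sensors (once)
    played: List[str] = []
    current: Optional[str] = None
    for frame in frames:                   # Capture Webcam Input, one frame per pass
        physio = read_physio()             # Read Physiological Data
        emotion = classify(frame, physio)  # preprocess and analyze both modalities
        if emotion != current:             # Map Emotional States to Music
            play(emotion)                  # Play Music (e.g. open a YouTube search)
            played.append(emotion)
            current = emotion
    return played                          # Stop once the frame source is exhausted
```

Restarting playback only on a change of emotion avoids reloading the same music on every frame; a real system might also add a minimum dwell time before switching.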

C. Methodology

IV. RESULTS AND DISCUSSION

The emotion sensor prototype, built from a webcam, an Arduino Uno, and a set of physiological sensors (temperature, skin response, heart rate, and pulse), showed promising results in detecting and responding to emotional states. By analyzing facial expressions captured by the webcam in real time together with physiological indicators such as skin temperature, skin conductance, heart rate, and pulse, the system categorized different emotional states with reasonable accuracy. Combining multiple sensors gave a fuller picture of the user's emotional state and allowed the system to select music from YouTube that matched the detected emotion.

In our experiments, the system identified emotions such as happiness, sadness, anger, and relaxation from a combination of facial cues and physiological responses. During periods of heightened arousal or stress, characterized by increased heart rate and skin conductance, the system recognized emotions such as anger or anxiety. Conversely, periods of calm were associated with lower physiological activity, reflected in decreased heart rate and skin conductance, indicating emotions such as contentment or relaxation.
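The arousal pattern described above (heart rate and skin conductance rising or falling together) can be expressed as a simple rule against a per-user resting baseline. This is a minimal sketch, not the paper's classifier: it assumes baseline readings recorded during a short resting period and an assumed threshold of 1 standard deviation, and the function names are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def zscore(value: float, baseline: Sequence[float]) -> float:
    """Standard score of `value` against a resting baseline (needs >= 2 samples)."""
    sd = stdev(baseline)
    return 0.0 if sd == 0 else (value - mean(baseline)) / sd


def arousal_level(heart_rate: float, skin_conductance: float,
                  hr_baseline: Sequence[float], sc_baseline: Sequence[float],
                  threshold: float = 1.0) -> str:
    """Return 'high', 'low', or 'neutral' physiological arousal.

    Both signals must move in the same direction by more than `threshold`
    baseline standard deviations; the 1.0 default is an assumed value.
    """
    z_hr = zscore(heart_rate, hr_baseline)
    z_sc = zscore(skin_conductance, sc_baseline)
    if z_hr > threshold and z_sc > threshold:
        return "high"     # e.g. anger or anxiety; facial cues tell these apart
    if z_hr < -threshold and z_sc < -threshold:
        return "low"      # e.g. contentment or relaxation
    return "neutral"
```

Because arousal alone cannot separate anger from anxiety, or contentment from relaxation, the label would still be combined with the facial expression result to name the emotion.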

Selecting music from YouTube based on the detected emotion appeared to enhance user experience and engagement. During moments of happiness or relaxation, the system chose upbeat or soothing music, reinforcing a positive emotional state and potentially supporting mood regulation. Similarly, during stress or sadness, it offered calming or comforting music, which may aid emotional regulation and mitigate negative affective states.

Despite these promising results, several limitations and areas for improvement exist. Firstly, the accuracy of emotion detection could be further enhanced through the incorporation of advanced machine learning algorithms capable of more nuanced emotion recognition. Additionally, expanding the range of physiological sensors and refining their calibration could provide deeper insights into emotional states. Furthermore, incorporating user feedback mechanisms to refine music selection algorithms based on individual preferences and context could improve the overall user experience. Overall, the integration of webcam-based facial recognition with physiological sensors and dynamic music selection presents a compelling avenue for the development of emotion-sensitive systems with various applications in human-computer interaction, mental health monitoring, and entertainment.
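One simple form of the user feedback mechanism mentioned above is a per-user score table over candidate tracks. The sketch below is hypothetical: it assumes a fixed list of candidate tracks per emotion, raises a track's score when the user likes it and lowers it when the user skips it, and always picks the highest-scoring track.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class FeedbackSelector:
    """Hypothetical per-user preference table for emotion-based music choice."""

    def __init__(self, candidates: Dict[str, List[str]]) -> None:
        self.candidates = candidates  # emotion -> candidate track ids (assumed input)
        self.scores: Dict[Tuple[str, str], int] = defaultdict(int)

    def choose(self, emotion: str) -> str:
        tracks = self.candidates[emotion]
        # max() returns the first track with the highest score, so ties keep list order
        return max(tracks, key=lambda t: self.scores[(emotion, t)])

    def feedback(self, emotion: str, track: str, liked: bool) -> None:
        self.scores[(emotion, track)] += 1 if liked else -1
```

This greedy rule never tries tracks it already ranks low; a fuller design might add occasional exploration (for example an epsilon-greedy policy) and take context such as time of day into account.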

V. CONCLUSION

In conclusion, integrating a webcam and an Arduino Uno with temperature, skin response, heart rate, and pulse sensors offers a promising approach to emotion detection and personalized music selection. With this technology, individuals can gain real-time insight into their emotional states, supporting self-awareness, with potential applications in mental health, entertainment, and human-computer interaction. Adapting music selection to the detected emotion adds a dynamic, immersive dimension to the user experience that can support emotional well-being and engagement. As sensor technology and signal processing algorithms advance, emotion-sensing systems are likely to become more accurate, more accessible, and more seamlessly integrated into daily life. This work opens avenues for further research in affective computing and for more empathetic, responsive human-machine interaction.

Copyright

Copyright © 2024 Sneha Gupta, Juideepa Das, Komal Gupta, Omkar Bholankar, Prathamesh Date, Prof. Shakti Kaushal. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59658

Publish Date : 2024-03-31

ISSN : 2321-9653

Publisher Name : IJRASET
